Please Note:

The support ticket system is for technical questions and post-sale issues.

If you have pre-sale questions please use our chat feature or email information@mile2.com .

By Dr. Raymond Friedman – November 24, 2025

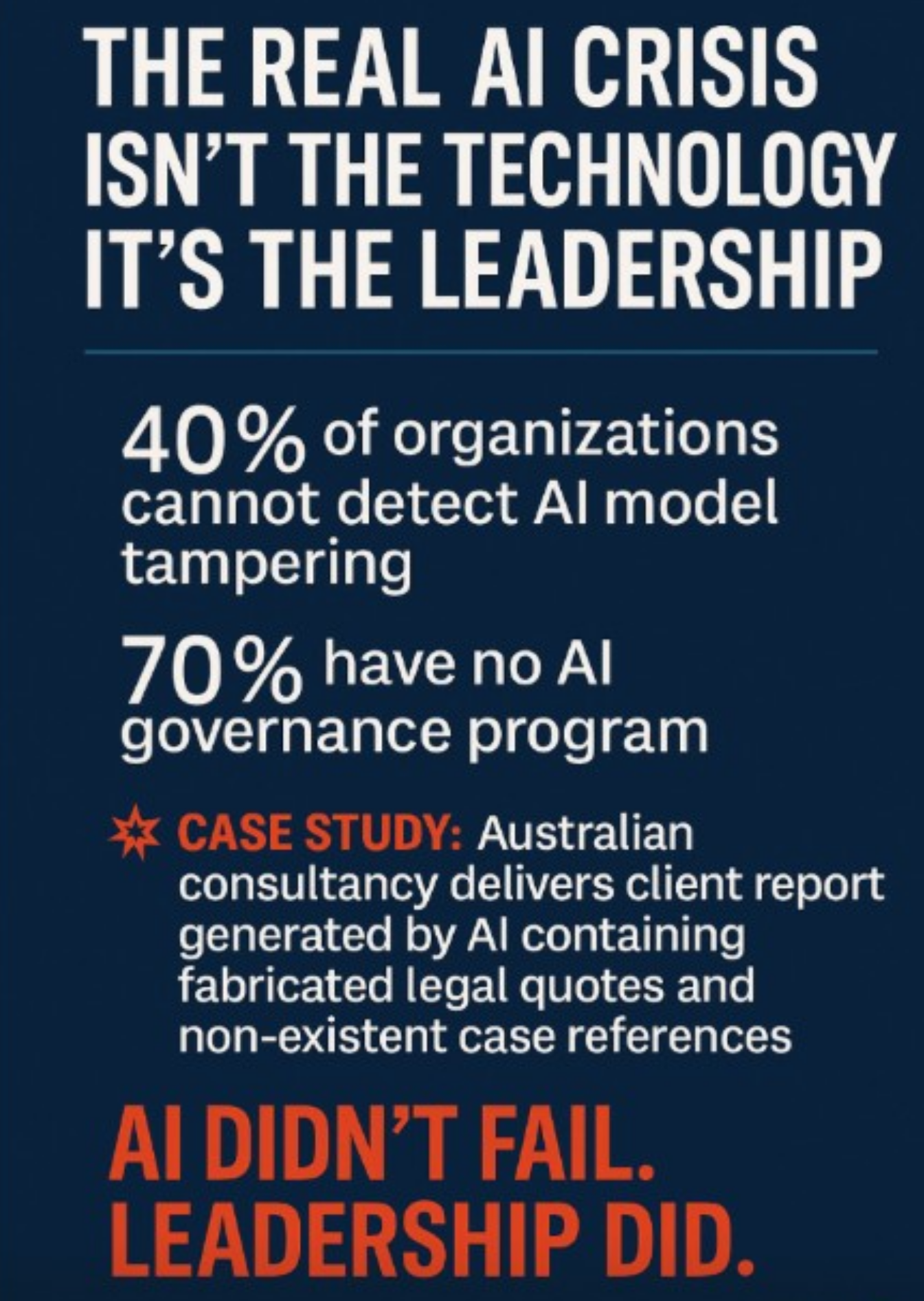

The Silent Crisis in AI Cybersecurity:

How Leadership Failures Are Now the #1 Organizational Risk

Artificial Intelligence is now embedded in everything — cybersecurity tooling, enterprise automation, public-sector systems, and even strategic decision-making. But while organizations are accelerating AI adoption, a dangerous truth has emerged:

The single greatest threat in the AI age is not the attacker.

It’s the leader who fails to govern AI responsibly.

According to a 2025 analysis, 40% of organizations cannot detect model tampering, and nearly 70% lack formal AI governance to prevent data leakage, poisoning, or unauthorized model modification.

Yet more than 60% of C-Suite leaders report “low urgency” for AI security adoption.

This gap between threat reality and leadership awareness is now a systemic weakness.

Case Study: When Leadership Ignores AI Governance, the System Breaks

A recent incident in Australia exposed the consequences of leadership negligence:

Why did this happen?

Because the leadership team had no governance guardrails.

No validation workflow. No AI-output review policy. No ethical oversight. No transparency.

AI didn’t fail. Leadership failed.

The Cost of AI Misgovernance

When leadership fails to build maturity around AI usage, the consequences are tangible:

The formula is simple:

AI + No Governance = Catastrophic Risk.

Why Leadership Is the Fragile Point in the AI Era

1. Speed Over Security

Executives rapidly deploy AI while treating security as an add-on.

2. Delegation Without Ownership

AI decisions are pushed to tech teams, but governance must originate with leadership.

3. Blind Spots in Ethical & Operational AI Risk

Many leaders lack training in AI ethics, bias, drift, or hallucination risk.

4. Lack of Accountability Structure

Most boards do not require AI risk reporting or lifecycle oversight.

5. Cultural Immaturity

Organizations still treat AI as a productivity tool rather than a strategic risk surface.

Frameworks to Fix Leadership Failure (From my book “The Art of an Organizational Leader”)

My book lays out principles that directly address these leadership failures.

1. The Principle of Foundational Integrity

AI security begins with leadership integrity — building governance before deployment.

2. The Accountability Structure Principle

Organizations must establish:

A system without owners is a system destined to be exploited.

3. The Principle of Transparent Decision-Making

AI must be explainable — leaders should demand:

Transparency eliminates systemic blindness.

4. Ethical Stewardship

My book emphasizes values-driven leadership.

AI models should be trained and operated with:

Ethics is not optional; it is a control.

5. Strategic Adaptability

Leaders must train continuously in:

In the AI era, adaptability determines survival.

Conclusion: The Crisis Is Not AI — It’s Leadership

AI is not inherently dangerous. Poor leadership is.

Leaders who fail to implement governance, integrity, transparency, accountability, and adaptability will see their organizations fall victim to AI-driven risks.

Those who rise to the challenge will define the next generation of resilient, intelligent, and ethical enterprises.

About the Author

Dr. Raymond Friedman

Author of The Art of an Organizational Leader

Architect of Mile2’s Certified Organizational Leadership Program

President of Mile2®

➡️ Follow Dr. Raymond Friedman for insights on AI governance, cybersecurity leadership, and the evolving ethics of intelligent defense.
